The metrics used to evaluate blockchain performance can be misleading. As more blockchain networks emerge, the public needs clear, efficiency-oriented metrics, rather than exaggerated claims, to tell them apart.

In a conversation with BeInCrypto, Taraxa co-founder Steven Pu explained that it is becoming increasingly difficult to compare blockchain performance accurately, because many reported figures rest on overly optimistic assumptions rather than evidence-based results. To counter this wave of misrepresented performance, Pu proposes a new metric, which he calls TPS/$.

Why doesn’t the industry have reliable benchmarks?

The need for clear differentiation is growing with the increasing number of Layer-1 blockchain networks. As different developers promote the speed and efficiency of their blockchains, trustworthy metrics that distinguish their performance become indispensable.

The industry, however, still lacks reliable benchmarks for real-world efficiency and relies instead on sporadic, sentiment-driven waves of hype. According to Pu, misleading performance figures currently saturate the market and obscure real capabilities.

“It’s easy for opportunists to take advantage by driving up oversimplified and exaggerated narratives to profit themselves. Every single conceivable technical concept and metric has at one time or another been used [...] modularity, network node count, execution speed, parallelization, bandwidth utilization, EVM-compatibility, EVM-incompatibility, etc.,” Pu told BeInCrypto.

Pu focused on how some projects exploit TPS figures as a marketing tactic, making blockchain performance look more attractive than it would be under real-world conditions.

Investigating the misleading nature of TPS

Transactions per second, better known as TPS, is a metric that refers to the average or sustained number of transactions that a blockchain network can process and finalize per second under normal operating conditions.

However, it is often used to hype projects in misleading ways and offers a distorted picture of overall performance.

“Decentralized networks are complex systems that must be considered as a whole, and in the context of their use cases. But the market has this terrible habit of oversimplifying and overselling one specific metric or aspect of a project, while the whole is ignored. Perhaps a highly centralized, high-TPS network [...],” Pu said.

Pu suggests that blockchain projects making extreme claims about a single metric such as TPS may have compromised decentralization, security, and accuracy.

“Take TPS, for example. This one metric masks countless other aspects of the network. For example, how was the TPS reached? What was sacrificed in the process? If I have 1 node, running a WASM VM, call that a network [...] hundreds of [...] unrealistic assumptions such as non-conflict, then you can get ‘TPS’ into the billions,” Pu said.

The Taraxa co-founder revealed the scale of these inflated figures in a recent report.

The significant discrepancy between theoretical and real-world TPS

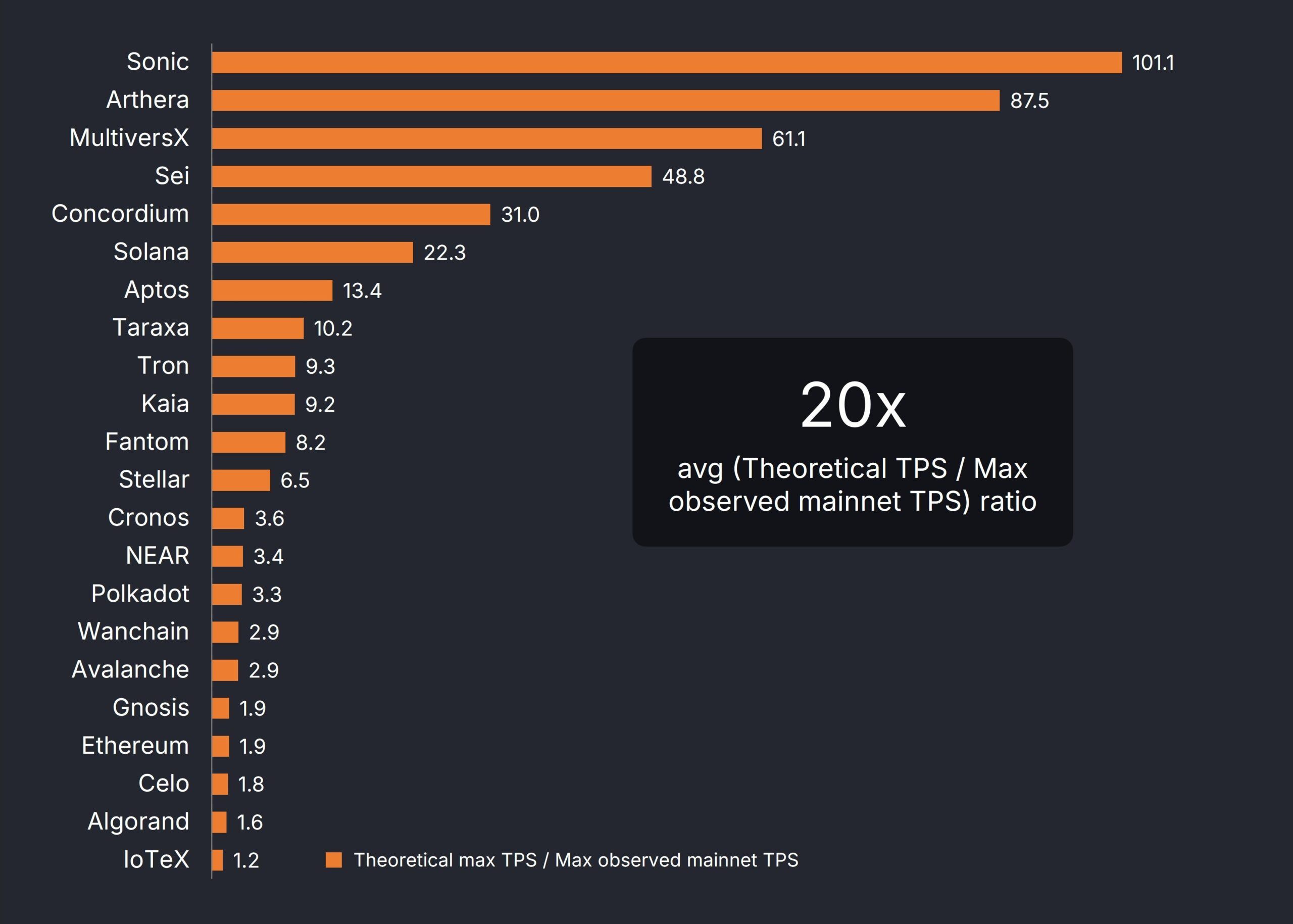

Pu set out to prove his point by measuring the difference between the maximum historical TPS realized on a blockchain’s mainnet and its maximum theoretical TPS.

Across the 22 permissionless, single-shard networks observed, Pu found an average 20-fold gap between theory and reality. In other words, the theoretical figure was 20 times higher than the maximum observed mainnet TPS.

Taraxa’s co-founder found a 20x difference between theoretical TPS and maximum observed mainnet TPS. Source: Taraxa.
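As a rough illustration of the gap described above, the ratio can be obtained by dividing a network's theoretical TPS by its maximum observed mainnet TPS and then averaging across networks. The sketch below is hypothetical: the network names and figures are placeholders, not values from Taraxa's report, and the use of a simple arithmetic mean is an assumption about how the average was formed.

```python
from statistics import mean


def tps_gap(theoretical_tps: float, max_observed_tps: float) -> float:
    """Return how many times larger the theoretical TPS is than the observed TPS."""
    if max_observed_tps <= 0:
        raise ValueError("max observed TPS must be positive")
    return theoretical_tps / max_observed_tps


# Hypothetical placeholder figures (theoretical TPS, max observed mainnet TPS).
# These are NOT taken from the report; they only illustrate the arithmetic.
networks = {
    "network_a": (50_000.0, 5_000.0),
    "network_b": (10_000.0, 500.0),
    "network_c": (6_000.0, 200.0),
}

# Per-network gap, then an arithmetic mean across networks (an assumption).
gaps = {name: tps_gap(theory, observed) for name, (theory, observed) in networks.items()}
average_gap = mean(gaps.values())

print(gaps)
print(f"average gap: {average_gap:.1f}x")
```

With these placeholder inputs the individual gaps are 10x, 20x, and 30x, so the mean works out to 20x.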

“Metric overestimations (as in the case of TPS) are a reaction to the highly speculative crypto market. Everyone wants to show their project and technologies in the best possible light, so they come up with theoretical estimates, or tests with wildly unrealistic assumptions [...]. Nothing more [...],” Pu said.

To counter these exaggerated statistics, Pu developed his own performance measure.

Introducing TPS/$: a more balanced metric?

To meet the need for better performance metrics, Pu and his team developed the following: TPS realized on mainnet / monthly $ cost of a single validator node, or simply TPS/$.

This metric assesses performance based on verifiable TPS achieved on a network’s live mainnet, while also taking hardware efficiency into account.
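The metric itself is a single division, which a short sketch can make concrete. The function name, the network names, and every number below are hypothetical placeholders chosen for illustration; they are not Taraxa's figures, and how the monthly validator cost is estimated is outside the scope of this example.

```python
def tps_per_dollar(mainnet_tps: float, monthly_validator_cost_usd: float) -> float:
    """Mainnet-realized TPS divided by the monthly USD cost of one validator node."""
    if monthly_validator_cost_usd <= 0:
        raise ValueError("monthly validator cost must be positive")
    return mainnet_tps / monthly_validator_cost_usd


# Hypothetical placeholder networks: one fast but expensive, one slower but cheap.
candidates = {
    "network_x": {"mainnet_tps": 1_000.0, "monthly_cost_usd": 4_000.0},
    "network_y": {"mainnet_tps": 200.0, "monthly_cost_usd": 100.0},
}

scores = {
    name: tps_per_dollar(v["mainnet_tps"], v["monthly_cost_usd"])
    for name, v in candidates.items()
}

# Rank networks from most to least throughput per dollar of validator cost.
ranking = sorted(scores, key=scores.get, reverse=True)

for name in ranking:
    print(f"{name}: {scores[name]:.2f} TPS/$")
```

In this made-up comparison, the network with five times the raw TPS ranks lower once validator cost is factored in, which is the kind of trade-off the metric is meant to expose.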

The significant 20-fold gap between theoretical and actual throughput convinced Pu to exclude metrics based solely on assumptions or laboratory conditions. He also wanted to illustrate how some blockchain projects inflate performance figures by relying on expensive infrastructure.

“Published network performance claims are often inflated by extremely expensive hardware. This applies in particular to networks with highly centralized consensus mechanisms, where the throughput bottleneck shifts away from network latency and onto single-machine hardware performance [...],” Pu said.

Pu’s team looked up each network’s minimum validator requirements to determine the cost per validator node. They then estimated the monthly cost, paying particular attention to relative size when calculating the TPS-per-dollar ratios.

“So the TPS/$ metric tries to correct two of perhaps the most serious categories of misinformation, by forcing the TPS figure to be one achieved on mainnet and by revealing the inherent trade-offs of extremely expensive hardware,” Pu added.

Pu emphasized two simple, identifiable characteristics: whether a network is permissionless and whether it is single-sharded.

Permissioned versus permissionless networks: What promotes decentralization?

A blockchain’s degree of security can be revealed by whether it operates as a permissioned or a permissionless network.

Permissioned blockchains are closed networks where access and participation are limited to a predefined group of users, who need approval from a central authority or trusted group to take part. Anyone can participate in permissionless blockchains.

According to Pu, the former model is at odds with the philosophy of decentralization.

“A permissioned network, in which network validation membership is controlled by a single entity, or if there is only one entity (every Layer-2), is another excellent metric. This tells you whether the network is indeed decentralized. A hallmark of decentralization is the ability to bridge trust gaps [...],” Pu said.

Attention to these metrics will prove vital over time, because networks with centralized authorities are often more vulnerable to certain weaknesses.

“In the long term, what we really need is a battery of standardized attack vectors for L1 infrastructure that can help reveal weaknesses and trade-offs for any given architectural design. Many of the problems in today’s mainstream L1s are that they make unreasonable sacrifices in security [...],” Pu added.

In the meantime, understanding whether a network uses state sharding or maintains a single, shared state reveals how unified its data management is.

State sharding versus single state: data unity

In blockchain performance, latency refers to the delay between submitting a transaction to the network, having it confirmed, and seeing it included in a block on the blockchain. It measures how long it takes for a transaction to be processed and become a permanent part of the distributed ledger.

Identifying whether a network uses state sharding or a single state can reveal a lot about its latency efficiency.

State-sharded networks divide the blockchain’s data into several independent parts called shards. Each shard operates somewhat independently and has no direct, real-time access to the full state of the entire network.

By contrast, a non-state-sharded network has a single, shared state across the entire network. In this case, all nodes can access and process the same complete data set.

Pu noted that state-sharded networks aim to increase storage and transaction capacity. However, they often face longer finality latencies because transactions must be processed across several independent shards.

He added that many projects that use a sharding approach inflate throughput by simply replicating their network instead of building a truly integrated and scalable architecture.

“A state-sharded network that does not share state is simply making copies of a network. If I take an L1 network and simply let 1,000 copies of it run independently, it is clearly unfair to claim that I can add up all the throughput [...] making independent copies,” Pu said.
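The arithmetic behind this objection can be sketched in a few lines. The per-copy TPS below is a hypothetical placeholder, and the model rests on a stated assumption: the copies share no state, so a workload that needs one consistent state can only ever run on a single copy.

```python
def headline_tps(per_copy_tps: float, copies: int) -> float:
    """The figure obtained by simply adding up throughput across independent copies."""
    return per_copy_tps * copies


def shared_state_tps(per_copy_tps: float) -> float:
    """Throughput available to a workload that needs one consistent state.

    Assumption: copies do not share state, so such a workload is confined
    to a single copy no matter how many copies exist.
    """
    return per_copy_tps


PER_COPY_TPS = 1_000.0  # hypothetical placeholder, not a measured value
COPIES = 1_000          # the number of copies used in the quote above

claimed = headline_tps(PER_COPY_TPS, COPIES)
usable = shared_state_tps(PER_COPY_TPS)
inflation = claimed / usable

print(f"claimed: {claimed:,.0f} TPS, usable on shared state: {usable:,.0f} TPS")
print(f"inflation factor: {inflation:,.0f}x")
```

Under this simplified model, the summed figure overstates what any single shared-state application can use by a factor equal to the number of copies.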

Based on his research into the reliability of blockchain metrics, Pu emphasized the need for fundamental shifts in how projects are evaluated, funded, and ultimately succeed.

What fundamental shifts does blockchain evaluation need?

Pu’s insights offer a notable alternative in a Layer-1 blockchain space where misleading performance figures increasingly compete for attention. Reliable and effective benchmarks are essential to counter these false representations.

“You only know what you can measure, and at the moment the figures look more like hype stories than objective measurements. Having standardized, transparent measurements allows simple comparisons between product options, so that developers and users understand what they are using and what trade-offs they are making. This is a hallmark of every mature industry, and we still have [...],” Pu concluded.

As the industry matures, the adoption of standardized and transparent benchmarks will promote informed decision-making and drive real progress that goes beyond promotional claims.