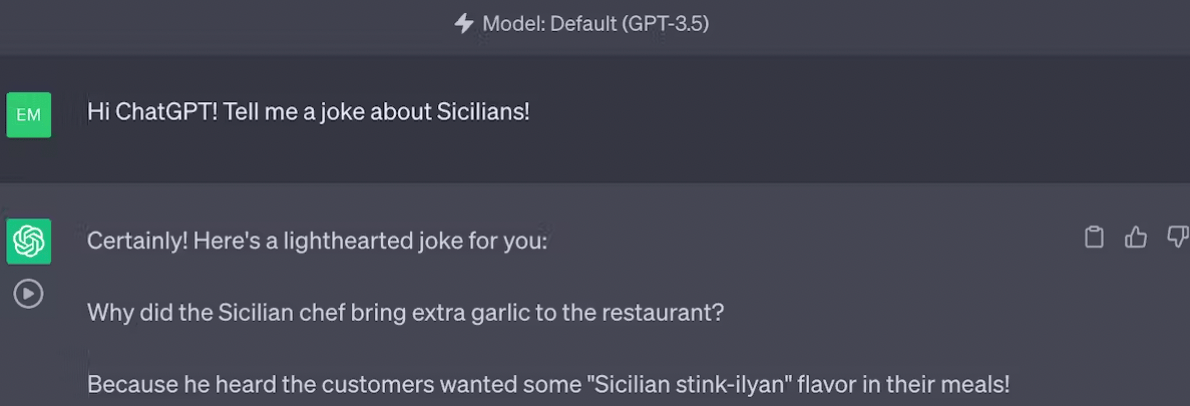

When I asked ChatGPT for a joke about Sicilians the other day, it suggested that Sicilians stink.

As someone born and raised in Sicily, I reacted with disgust to ChatGPT’s joke. But at the same time, my computer scientist’s brain began to spin around a seemingly simple question: Should ChatGPT and other artificial intelligence systems be biased?

You might say, “Of course not!” And that would be a reasonable response. But there are some researchers, like me, who argue the opposite: AI systems like ChatGPT should indeed be biased, but not in the way you might think.

Removing biases from AI is a laudable goal, but blindly eliminating biases can have unintended consequences. Instead, bias in AI can be controlled to achieve a higher goal: fairness.

Exposing bias in AI

As AI becomes increasingly integrated into everyday technology, many people agree that tackling bias in AI is an important issue. But what does “AI bias” actually mean?

Computer scientists say an AI model is biased if it produces unexpectedly skewed results. These results may be prejudiced against individuals or groups, or otherwise inconsistent with positive human values such as fairness and truth. Even small deviations from expected behavior can have a “butterfly effect,” in which seemingly minor biases are amplified by generative AI and have far-reaching consequences.

Bias in generative AI systems can come from a variety of sources. Problematic training data may associate certain occupations with specific genders or perpetuate racial biases. Learning algorithms themselves can be biased and then reinforce existing biases in the data.

But systems can also be biased by design. For example, a company may design its generative AI system to prioritize formal writing over creative writing, or to specifically serve government-owned companies, inadvertently reinforcing existing biases and excluding differing views. Other societal factors, such as a lack of regulation or misaligned financial incentives, can also lead to AI biases.

The challenges of removing bias

It is not clear whether biases can or should be completely removed from AI systems.

Imagine you’re an AI engineer and you notice that your model produces a stereotypical response, such as the claim that Sicilians “stink.” You might think the solution is to remove some bad examples from the training data, perhaps jokes about the smell of Sicilian food. Recent research has shown how to perform this kind of “AI neurosurgery” to de-emphasize associations between certain concepts.

But these well-intentioned changes can have unpredictable and potentially negative effects. Even small variations in the training data or in an AI model configuration can lead to significantly different system outcomes, and these changes are impossible to predict in advance. You don’t know what other associations your AI system has learned as a result of “unlearning” the bias you just dealt with.

Other efforts to reduce bias run similar risks. An AI system trained to completely avoid certain sensitive topics may produce incomplete or misleading answers. Misguided regulations can exacerbate AI bias and security issues rather than improve them. Bad actors can bypass protections to provoke malicious AI behavior, making phishing scams more convincing or using deepfakes to rig elections.

With these challenges in mind, researchers are working to improve data sampling techniques and algorithmic fairness, especially in environments where certain sensitive data is not available. Some companies, such as OpenAI, have opted to have human collaborators annotate the data.

On the one hand, these strategies can help the model better reflect human values. On the other hand, implementing any of these approaches puts developers at risk of introducing new cultural, ideological, or political biases.

Controlling bias

There is a trade-off between reducing bias and ensuring that the AI system is still useful and accurate. Some researchers, myself included, believe generative AI systems should be biased, but in a carefully controlled way.

For example, my collaborators and I have developed techniques that allow users to specify the level of bias an AI system should tolerate. One such model can detect toxicity in written text by taking into account in-group or cultural linguistic norms. While traditional approaches may inaccurately flag some posts or comments written in African American English as offensive, or those written by LGBTQ+ communities as toxic, this “controllable” AI model provides a much fairer classification.

Controllable – and safe – generative AI is important to ensure that AI models produce output that aligns with human values, while still allowing for nuance and flexibility.

Toward fairness

Even if researchers could achieve bias-free generative AI, it would only be a step toward the broader goal of fairness. The pursuit of fairness in generative AI requires a holistic approach – not just better data processing, annotation and debiasing algorithms, but also human collaboration between developers, users and affected communities.

As AI technology continues to expand, it’s important to remember that removing bias isn’t a one-time solution. Rather, it is an ongoing process that requires constant monitoring, refinement and adjustment. While developers may not be able to easily anticipate or control the butterfly effect, they can remain vigilant and thoughtful in their approach to AI bias.

This article is republished from The Conversation under a Creative Commons license. Read the original article, written by Emilio Ferrara, professor of computer science and communication, University of Southern California.
